Introduction to Coding

- Introduction to the history of mathematics and computing:

  - Brief overview of the history of mathematics and how it has evolved over time.

  - Discussion of how mathematics has played a key role in the development of computing, including the development of algorithms, the representation of data, and the creation of programming languages.

  - Introduction to key mathematical concepts, such as binary numbers, logic gates, and Boolean algebra, and how they are used in computing.

- Introduction to programming fundamentals using JavaScript:

  - Overview of what programming is and why it is important.

  - Introduction to JavaScript, its syntax, and basic data types.

  - Introduction to variables, functions, control structures (if-else, for loop, while loop), and arrays.

  - Discussion of the DOM (Document Object Model) and how JavaScript can interact with HTML elements.

  - Hands-on coding exercises to reinforce the concepts learned.

- Project setup with VSCode:

  - Introduction to VSCode and its features for writing and debugging code.

  - How to set up a project, create a new file, and write code in VSCode.

  - How to use the integrated terminal to run and debug your code.

  - Overview of extensions and how to install and use them to enhance your development experience.

Additional Resources

- Infinite Powers - Steven Strogatz

- Turing’s Cathedral - George Dyson

# History of Mathematics

Thousands of years of philosophy and mathematics led to computing as we know it today.

Throughout history there have been many advances in mathematics.

Here is a rough historical timeline of mathematics leading to the age of computing today:

- Ancient Times (3000 BC - 400 AD): Early forms of mathematics, such as arithmetic, geometry, and algebra, were developed in ancient civilizations such as Babylon, Egypt, and Greece. Arithmetic dealt with counting and basic operations such as addition, subtraction, multiplication, and division. Geometry dealt with the study of shapes, sizes, and positions of objects in space. Algebra involved the use of symbols and equations to represent and solve mathematical problems.

- Middle Ages (400 AD - 1400 AD): During the Middle Ages, mathematics continued to develop, particularly in the Islamic world, where new mathematical concepts were developed and earlier works were translated into Arabic. For example, the 9th-century Persian mathematician al-Khwarizmi, from whose name the word “algorithm” derives, described systematic step-by-step procedures for solving problems in arithmetic and algebra.

- Renaissance (1400 AD - 1700 AD): The Renaissance saw a revival of mathematics and science, with major advances made by thinkers such as Leonardo da Vinci, Johannes Kepler, and Galileo Galilei. Toward the end of this period, in the late 1600s, calculus was developed independently by Isaac Newton and Gottfried Wilhelm Leibniz. Calculus provided a mathematical tool for modeling change and analyzing dynamic systems, and was later applied to fields such as physics, engineering, and economics.

- 18th and 19th Centuries (1700 AD - 1900 AD): The 18th and 19th centuries saw a rapid expansion of mathematics, with many new branches of mathematics being developed, such as number theory, probability theory, and abstract algebra. Number theory dealt with the study of integers and their properties, while probability theory provided a mathematical framework for modeling uncertainty and randomness. Abstract algebra involved the study of algebraic structures and their properties. These advances in mathematics provided a foundation for later developments in computer science.

- 20th Century (1900 AD - 2000 AD): The 20th century saw the birth of modern computing and the application of mathematical concepts to the design and implementation of computers. Alan Turing developed the concept of the Universal Turing Machine, which provided a theoretical model for the modern computer. Boolean algebra, developed by George Boole in the 19th century, was applied to switching circuits by Claude Shannon in the 1930s and became the mathematical foundation for digital logic and the design of computer circuits. The theory of computability, developed by Kurt Gödel, Alonzo Church, and Alan Turing, provided a mathematical framework for understanding what can and cannot be computed by a computer. These advances in mathematics laid the foundation for modern computing and the computer science field.

During the computer revolution, great thinkers, engineers, and mathematicians stood on the shoulders of giants as they created the foundation for computing as we know it today.

- Late 1600s: Calculus, developed independently by Isaac Newton and Gottfried Leibniz, provides the mathematical foundation for modeling and analyzing complex systems, including the behavior of electronic circuits. The idea of a mathematical function, which maps inputs to outputs, later became central to how algorithms and circuits are described.

- 1800s: Boolean Algebra, developed by George Boole, provides the mathematical basis for binary logic and the digital representation of information in computers. Boolean Algebra’s concepts of true/false values, logical operations (AND, OR, NOT), and the manipulation of binary digits (bits) were crucial in the design of computer circuits and the creation of programming languages.

- 1900s: Alan Turing, considered the father of theoretical computer science, built upon the work of Boole and Leibniz to formalize the concepts of computability and algorithms, which became the foundation of modern computer science. Turing’s theoretical machine, now known as the Turing machine, laid the theoretical basis for the study of algorithms and computation and informed the design of early computers.

These mathematical concepts and advancements played a crucial role in the development of early computing and the work of pioneers like Alan Turing. They laid the foundation for the design of computer algorithms, circuits, and programming languages, which paved the way for the development of the modern computers we have today.

# Alan Turing

Alan Turing was a British mathematician and computer scientist who made many important contributions to the field of computing. Some of his most significant advances and concepts include:

- The Turing Machine: Turing is best known for his concept of the “universal machine,” now known as the “Turing Machine,” which is considered the theoretical basis for modern computers. The Turing Machine is a theoretical machine that can perform any calculation that can be done by a computer.

- The Turing Test: Turing proposed the Turing Test as a measure of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. The Turing Test is still widely discussed as a reference point for artificial intelligence.

- Codebreaking: During World War II, Turing worked at Bletchley Park, the British government’s codebreaking center. He was a key figure in breaking the German Enigma code, which was a significant factor in the Allied victory in the war.

- The Idea of Computability: Turing is credited with developing the idea of computability, which is the study of what can and cannot be computed. He showed that there are certain mathematical problems that are not computable, such as the halting problem. This result is closely related to the incompleteness theorems that Kurt Gödel had published a few years earlier.

- The Concept of the Stored-Program Computer: Turing is considered one of the pioneers of the stored-program computer, which is a computer architecture that stores instructions and data in memory, rather than having them hard-wired into the machine.

These contributions and others made by Alan Turing were foundational to the development of computing as we know it today, and he is widely regarded as one of the most important figures in the history of computing.

Alan Turing worked with several other individuals and teams who made important contributions to the field of computing. Some of the notable people who worked with or were associated with Turing include:

- Max Newman: Max Newman was a mathematician whose Cambridge lectures on the foundations of mathematics prompted Turing’s work on computability. During World War II he led a section at Bletchley Park that attacked the German Lorenz cipher, and he was one of the first people to realize the potential of the Colossus, an early electronic computer built for that work.

- John von Neumann: John von Neumann was a Hungarian mathematician and computer scientist who worked on the Manhattan Project and later made important contributions to the field of computer architecture. He is best known for his concept of the stored-program computer, which is a computer architecture that stores instructions and data in memory, rather than having them hard-wired into the machine.

- Alonzo Church: Alonzo Church was an American logician and mathematician who worked on the theory of computability, which is the study of what can and cannot be computed. He independently developed the concept of the lambda calculus, which is a formal system for expressing mathematical functions, and is considered one of the foundations of the theory of computability.

- Claude Shannon: Claude Shannon was an American mathematician and electrical engineer who is considered the father of modern digital circuit design. He made important contributions to the field of information theory and developed the concept of entropy, which is a measure of the amount of uncertainty in a signal.

- Grace Hopper: Grace Hopper was an American computer scientist who was one of the first programmers of the Harvard Mark I computer. Her FLOW-MATIC language strongly influenced the design of the COBOL programming language. She was a key figure in the development of early computer software and is credited with popularizing the term “debugging.”

These individuals and others worked with Alan Turing or made contributions to the field of computing in the same era, and their work helped to establish the foundations of modern computing.

# Boolean Logic

Boolean logic is a mathematical system that uses binary values, typically represented as “true” or “false”, to represent and manipulate information. It provides the foundation for digital information representation in computers.

# Basic Operations

The following are the basic operations and symbols used in Boolean logic:

# AND (∧)

The AND operation returns “true” if both input values are “true”. Otherwise, it returns “false”.

Example:

- true ∧ true = true

- true ∧ false = false

- false ∧ false = false

# OR (∨)

The OR operation returns “true” if at least one of the input values is “true”. Otherwise, it returns “false”.

Example:

- true ∨ true = true

- true ∨ false = true

- false ∨ false = false

# NOT (¬)

The NOT operation negates the input value. If the input value is “true”, the NOT operation returns “false”. If the input value is “false”, the NOT operation returns “true”.

Example:

- ¬ true = false

- ¬ false = true

# XOR (⊕)

The XOR (exclusive OR) operation returns “true” if exactly one of the input values is “true”. Otherwise, it returns “false”.

Example:

- true ⊕ true = false

- true ⊕ false = true

- false ⊕ false = false

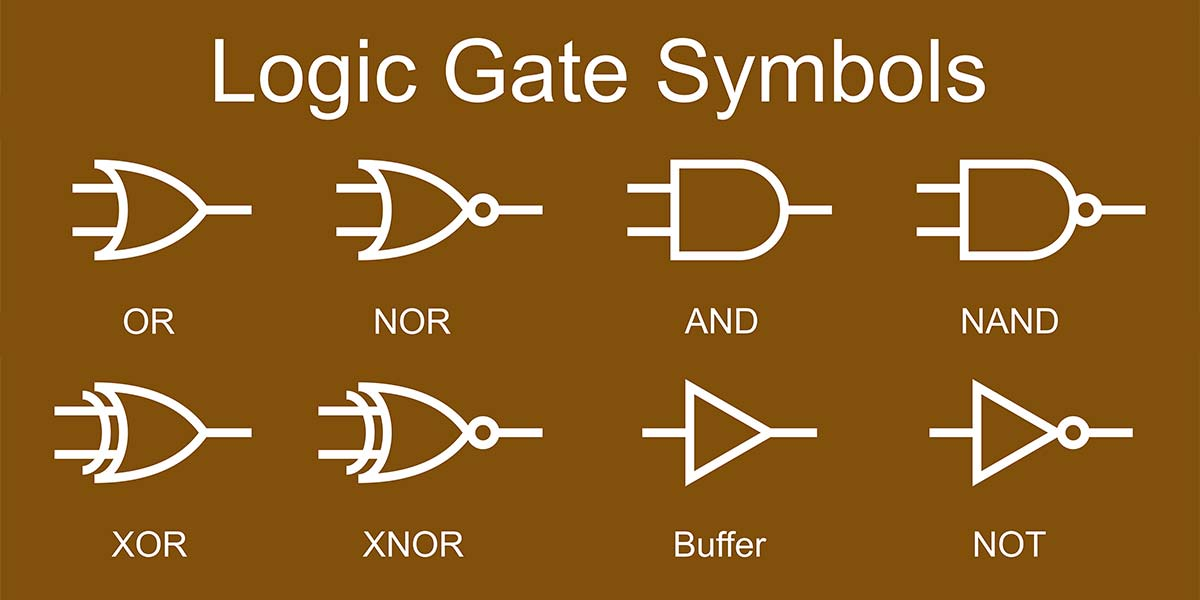

These operations can be combined to form complex expressions and used to represent and manipulate digital information in computers. For example, they correspond to the logic gates used in computer circuits, and they underlie programming constructs such as if-else statements. The most common logic gates are:

- AND: Returns “true” if both inputs are “true”, otherwise returns “false”.

- OR: Returns “true” if either of the inputs is “true”, otherwise returns “false”.

- NOT: Negates the input, returns “false” if the input is “true” and vice versa.

- NAND: The negation of AND, returns “false” if both inputs are “true”, otherwise returns “true”.

- NOR: The negation of OR, returns “false” if either of the inputs is “true”, otherwise returns “true”.

- XOR: Returns “true” if exactly one of the inputs is “true”, otherwise returns “false”.

- XNOR: The negation of XOR, returns “true” if both inputs have the same value (both “true” or both “false”), otherwise returns “false”.
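The gates listed above can be sketched as small JavaScript functions. This is only an illustration using JavaScript booleans; the function names and the `gates` object are chosen for this example and are not part of any library.

```javascript
// Each gate is a small function over JavaScript booleans.
const AND = (a, b) => a && b;
const OR = (a, b) => a || b;
const NOT = (a) => !a;
const NAND = (a, b) => !(a && b); // negation of AND
const NOR = (a, b) => !(a || b);  // negation of OR
const XOR = (a, b) => a !== b;    // true when exactly one input is true
const XNOR = (a, b) => a === b;   // true when both inputs match

// Print a truth table for every two-input gate.
const gates = { AND, OR, NAND, NOR, XOR, XNOR };
for (const [name, gate] of Object.entries(gates)) {
  for (const a of [false, true]) {
    for (const b of [false, true]) {
      console.log(`${a} ${name} ${b} = ${gate(a, b)}`);
    }
  }
}
```

Comparing booleans with `!==` and `===` is one simple way to express XOR and XNOR, since two booleans differ exactly when one of them is true.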

# Datatypes and Variables

Data types in programming are ways to classify and organize different types of data. Some common data types in programming include:

- Integer: Whole numbers, either positive or negative.

- Float: Real numbers with decimal points.

- String: Sequence of characters, typically used to represent text.

- Boolean: A binary data type that can only have two values: “true” or “false”.

- Array: A collection of elements of the same or different data types.

- Dictionary/Map: An associative array that maps keys to values.

- Object: A user-defined data type, typically used to encapsulate related data and functionality.

The data type of a variable determines the kind of value it can hold and the operations that can be performed on it. Different programming languages have different sets of built-in data types, and some languages also allow the creation of custom data types.
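As a rough illustration of how these categories look in JavaScript, the sketch below declares one value of each kind and inspects it with the `typeof` operator. The variable names are made up for this example. Note that JavaScript uses the single `number` type for both integers and floats, and reports arrays as `object`.

```javascript
const count = 42;                      // integer, stored as a number
const price = 19.99;                   // float, also a number
const greeting = "Hello";              // string
const isReady = true;                  // boolean
const scores = [90, "A", true];        // array holding mixed types
const user = { name: "Ada", age: 36 }; // object used as a key-value map

console.log(typeof count);          // "number"
console.log(typeof price);          // "number"
console.log(typeof greeting);       // "string"
console.log(typeof isReady);        // "boolean"
console.log(typeof scores);         // "object" (arrays are objects in JavaScript)
console.log(Array.isArray(scores)); // true
console.log(typeof user);           // "object"
```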

# Operators

Operators in programming are symbols that perform operations on values and variables. They allow us to manipulate and make decisions based on the values of variables. Here are some common types of operators in programming:

- Arithmetic operators: Perform basic arithmetic operations such as addition, subtraction, multiplication, division, etc.

- Comparison operators: Compare values and return a Boolean value based on the result of the comparison (e.g. “greater than”, “less than”, “equal to”).

- Logical operators: Perform Boolean operations on variables or values (e.g. AND, OR, NOT).

- Assignment operators: Assign values to variables (e.g. “=”).

- Bitwise operators: Perform operations on individual bits of binary data.

- Ternary operator: A shorthand way to write an “if-else” statement using a single line of code.

- Miscellaneous operators: Other operators that do not fit the categories above, such as the increment and decrement operators.

The specific operators available in a programming language and their syntax may vary, but their overall purpose is to provide a way to manipulate and make decisions based on the values of variables.
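The operator categories above might look like this in JavaScript. The values `a`, `b`, and `total` are arbitrary and chosen only for illustration.

```javascript
const a = 7;
const b = 2;

// Arithmetic operators
console.log(a + b); // 9
console.log(a * b); // 14
console.log(a / b); // 3.5
console.log(a % b); // 1 (remainder)

// Comparison operators produce booleans
console.log(a > b);   // true
console.log(a === b); // false

// Logical operators combine booleans
console.log(a > 5 && b > 5); // false
console.log(a > 5 || b > 5); // true
console.log(!(a > b));       // false

// Assignment operators
let total = 10;
total += 5; // same as total = total + 5
console.log(total); // 15

// Bitwise operators work on the binary digits: 7 is 111, 2 is 010
console.log(a & b); // 2 (010)
console.log(a | b); // 7 (111)

// Ternary operator: a one-line if-else
console.log(a > 5 ? "big" : "small"); // "big"
```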

# Control Flow

Control flow in programming refers to the order in which the statements in a program are executed. It determines which statements get executed under what conditions, and which statements get skipped. There are several control flow constructs in most programming languages, including:

- Conditional statements (e.g. if-else): Execute a block of code only if a certain condition is met.

- Loops (e.g. for, while): Repeat a block of code multiple times until a certain condition is met.

- Jump statements (e.g. break, continue, return): Transfer control flow to another part of the program, either by exiting a loop prematurely or by returning from a function.

- Switch/case statements: Select a block of code to execute based on the value of a variable.

These control flow constructs allow us to write programs that can make decisions and repeat actions, making it possible to write more complex algorithms. The specific syntax for control flow statements may vary between programming languages, but the basic concepts remain the same.
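The constructs above can be sketched in JavaScript as follows. The function names `describe` and `dayType` and the sample values are hypothetical, picked only to show each construct once.

```javascript
// Conditional statement: choose one branch based on a condition.
function describe(n) {
  if (n < 0) {
    return "negative";
  } else if (n === 0) {
    return "zero";
  } else {
    return "positive";
  }
}

// for loop with jump statements: collect even numbers up to 6.
const evens = [];
for (let i = 1; i <= 10; i++) {
  if (i % 2 !== 0) continue; // skip odd numbers
  if (i > 6) break;          // leave the loop early
  evens.push(i);
}
console.log(evens); // [2, 4, 6]

// while loop: repeat as long as the condition holds.
const countdown = [];
let n = 3;
while (n > 0) {
  countdown.push(n);
  n--;
}
console.log(countdown); // [3, 2, 1]

// switch statement: select a block based on a value.
function dayType(day) {
  switch (day) {
    case "Saturday":
    case "Sunday":
      return "weekend";
    default:
      return "weekday";
  }
}
console.log(describe(-4), dayType("Sunday")); // negative weekend
```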

# Functions

Functions in programming are blocks of reusable code that perform a specific task. They are defined by a name and a set of input parameters, and can return a value or multiple values. Functions allow us to organize our code into smaller, more manageable chunks, and to encapsulate complex logic into self-contained units.

Here are some benefits of using functions in programming:

- Reusability: Functions can be reused across multiple parts of a program, reducing code duplication and improving maintainability.

- Abstraction: Functions hide the implementation details of a task, allowing the rest of the program to interact with the function at a higher level of abstraction.

- Modularity: Functions provide a way to split a large program into smaller, more manageable parts, making it easier to debug and understand the code.

- Readability: Functions can make code easier to read and understand by breaking it down into smaller, self-contained parts.

The syntax for defining a function varies between languages. Python uses the “def” keyword, while JavaScript uses the “function” keyword or arrow syntax. In each case the definition gives the function name, the input parameters, and the code to be executed when the function is called. Functions can be called from anywhere they are in scope, and can optionally return a value to the calling code.
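A minimal JavaScript sketch of defining and calling functions is shown here. The names `areaOfRectangle`, `square`, and `minAndMax` are invented for this example. Returning an object is one common way to hand back several values at once.

```javascript
// A function declared with the function keyword.
function areaOfRectangle(width, height) {
  return width * height;
}

// The same idea written as an arrow function.
const square = (x) => x * x;

// Returning several values by wrapping them in an object.
function minAndMax(values) {
  return { min: Math.min(...values), max: Math.max(...values) };
}

console.log(areaOfRectangle(3, 4)); // 12
console.log(square(5));             // 25

const { min, max } = minAndMax([4, 9, 1, 7]);
console.log(min, max);              // 1 9
```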

# Arrays and Other Data Structures

An array is a data structure in programming that holds a collection of elements, typically of the same data type. The elements can be accessed by their index, which is an integer value representing their position within the array. Arrays are used to store and organize data in a convenient, index-based way.

Similar data structures in programming include:

- Lists: A dynamic collection of elements, which can be of different data types and can grow or shrink in size at runtime.

- Stacks: A linear data structure where elements are added and removed from the same end, following the “last in, first out” (LIFO) principle.

- Queues: A linear data structure where elements are added to one end and removed from the other, following the “first in, first out” (FIFO) principle.

The hardware level is sometimes important in arrays and similar data structures because the way the data is stored and accessed in memory can have a significant impact on performance. For example, because an array’s elements sit in contiguous memory, the address of any element can be computed directly from its index, and neighboring elements tend to be loaded into the CPU cache together. Structures whose elements are scattered in memory, such as linked lists, must instead follow a pointer from one element to the next. Dynamic arrays that can grow and shrink at runtime typically reserve extra capacity and copy their elements into a larger block only when that capacity runs out, which keeps the average cost of appending an element low.

In general, arrays and similar data structures in programming provide a way to store and organize data in memory, and their specific implementation can vary depending on the requirements of the application and the capabilities of the hardware.
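In JavaScript, a plain array can play the role of a list, a stack, or a queue. The sketch below is illustrative only. The variable names are made up, and `shift()` on a very large array can be slow, so a dedicated queue structure may be preferable in performance-sensitive code.

```javascript
// Index-based access: positions start at 0.
const letters = ["a", "b", "c"];
console.log(letters[0]);     // "a"
console.log(letters.length); // 3

// Stack (LIFO): add and remove at the same end.
const stack = [];
stack.push("first");
stack.push("second");
stack.push("third");
const top = stack.pop();
console.log(top);   // "third" (last in, first out)
console.log(stack); // ["first", "second"]

// Queue (FIFO): add at the back, remove from the front.
const queue = [];
queue.push("first");
queue.push("second");
queue.push("third");
const next = queue.shift();
console.log(next);  // "first" (first in, first out)
console.log(queue); // ["second", "third"]
```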

# Objects

Objects are a fundamental concept in object-oriented programming (OOP) and represent instances of classes, which are essentially user-defined data types. An object is a self-contained unit that contains data and behavior, which can be manipulated and interacted with through methods and properties.

An object can be thought of as a real-world entity, such as a person, a car, or a bank account, that has specific attributes (e.g. name, age, balance) and can perform specific actions (e.g. drive, deposit, withdraw).

Here are some benefits of using objects in programming:

- Encapsulation: Objects encapsulate data and behavior, providing a way to structure and organize code into meaningful, reusable units.

- Abstraction: Objects can provide an abstract representation of real-world entities, hiding the details of their implementation and making it easier to reason about and work with the data.

- Reusability: Objects can be reused across multiple parts of a program, reducing code duplication and improving maintainability.

- Polymorphism: Objects can be used in a generic manner, allowing them to be treated as instances of a common base class, regardless of their specific implementation.

In most object-oriented programming languages, objects are created by instantiating classes, which are defined using a class definition syntax. The class defines the structure of the object, including its properties and methods, and the object is created by calling a constructor, which sets the initial state of the object. The object can then be manipulated and interacted with through its methods and properties, which provide a way to access and modify its data and behavior.
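The bank account example mentioned earlier could be sketched with JavaScript classes as follows. The class names, the `rate` property, and the error message are assumptions made for this illustration.

```javascript
// A class defines the structure: properties plus methods.
class BankAccount {
  constructor(owner, balance = 0) {
    this.owner = owner;     // property
    this.balance = balance; // property
  }

  deposit(amount) {
    this.balance += amount;
    return this.balance;
  }

  withdraw(amount) {
    if (amount > this.balance) {
      throw new Error("Insufficient funds");
    }
    this.balance -= amount;
    return this.balance;
  }
}

// A subclass reuses the base class and adds behavior.
class SavingsAccount extends BankAccount {
  constructor(owner, balance, rate) {
    super(owner, balance);
    this.rate = rate;
  }

  addInterest() {
    return this.deposit(this.balance * this.rate);
  }
}

const account = new BankAccount("John", 100); // calls the constructor
account.deposit(50);
account.withdraw(30);
console.log(account.balance); // 120

const savings = new SavingsAccount("Jane", 200, 0.5);
savings.addInterest();
console.log(savings.balance); // 300
console.log(savings instanceof BankAccount); // true
```

Because `SavingsAccount` extends `BankAccount`, any code written against the base class can also accept a savings account, which is the polymorphism benefit described above.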

# Input/Output

I/O (Input/Output) refers to the process of reading and writing data to and from external sources, such as files, databases, networks, or other devices. I/O is an essential aspect of most programming and is used to store and retrieve data, interact with users, and communicate with other systems.

Here are some examples of I/O in programming:

- Reading from a file: Opening a file, reading its contents, and storing the data in memory for processing.

- Writing to a file: Creating a new file or overwriting an existing file with new data.

- Console input/output: Reading input from the user through the keyboard or other input device, and displaying output on the screen.

- Network communication: Sending and receiving data over a network, such as a web API or a socket connection.

- Database access: Reading and writing data to a database, such as a SQL database or a NoSQL database.

I/O is typically performed through libraries or APIs provided by the programming language or operating system, which provide a convenient and standardized way to access external resources. For example, in Python, you can use the open() function to open a file and read its contents, and the write() method to write data to a file.

The way I/O is performed can have a significant impact on the performance and efficiency of a program, particularly when working with large amounts of data. For example, reading and writing data in small, manageable chunks can help to reduce memory usage and improve overall performance. Additionally, using buffered I/O, which stores data in memory before writing it to disk, can improve I/O performance by reducing the number of disk accesses.
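For comparison with the Python functions mentioned above, here is a small file I/O sketch in JavaScript. It assumes a Node.js environment, because browsers do not expose the `fs` module, and the file name `io-example.txt` is hypothetical. The synchronous calls are used only to keep the example short.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Build a path inside the system's temporary directory.
const filePath = path.join(os.tmpdir(), "io-example.txt");

// Writing to a file: creates it, or overwrites it if it exists.
fs.writeFileSync(filePath, "line one\nline two\n", "utf8");

// Reading from a file: load the whole contents into memory.
const contents = fs.readFileSync(filePath, "utf8");
const lines = contents.trim().split("\n");

// Console output.
console.log(`Read ${lines.length} lines`);

// Clean up the temporary file.
fs.unlinkSync(filePath);
```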

# Debugging

Debugging is the process of finding and fixing errors or bugs in a computer program. Debugging is an essential part of software development and helps to ensure that a program behaves as intended.

Debugging can involve several techniques, including:

- Examining error messages: Reviewing error messages and stack traces generated by the program to identify the source of the problem.

- Stepping through code: Using a debugger to step through the code line by line, examining the values of variables and other state information to understand how the program is executing.

- Printing debug information: Adding print statements or logging information to the code to track the execution of the program and inspect its behavior.

- Using breakpoints: Setting breakpoints in the code, which cause the program to stop executing at a specific line or location, so that the state of the program can be examined.

- Replicating the issue: Recreating the issue in a controlled environment to better understand the root cause and determine the most effective solution.

Debugging can be a time-consuming process and requires a strong understanding of the program and its requirements. Effective debugging requires patience and a systematic, logical approach to identifying the cause of the problem.

Most programming environments provide integrated debugging tools, such as the debugger built into an integrated development environment (IDE), which can help to automate and simplify the debugging process. The use of automated testing and other quality assurance techniques can also help to reduce the need for manual debugging and improve the reliability of a program.

# Algorithms

An algorithm is a step-by-step procedure for solving a problem in a finite amount of time. In computer programming, an algorithm is a set of instructions that a computer can follow to perform a specific task.

Algorithms are the building blocks of computer programs and are used in a wide range of applications, from simple tasks such as sorting data to more complex tasks such as natural language processing.

An algorithm must have the following properties:

- Input: Accept input data

- Output: Produce at least one result

- Definiteness: Have a clear and well-defined set of steps

- Finiteness: Terminate after a finite number of steps

- Correctness: Produce correct results for all inputs

There are many types of algorithms, including:

- Sorting algorithms: Used to sort data in a particular order, such as ascending or descending. Examples include QuickSort, MergeSort, and BubbleSort.

- Search algorithms: Used to search for a specific item in a data structure. Examples include Linear Search and Binary Search.

- Graph algorithms: Used to solve problems involving graphs, such as finding the shortest path between two nodes. Examples include Dijkstra’s algorithm and Depth-First Search.

- Divide-and-conquer algorithms: Solve problems by dividing them into smaller sub-problems and solving each sub-problem individually. Examples include MergeSort and the Fast Fourier Transform.

- Dynamic programming algorithms: Solve problems by breaking them down into smaller sub-problems and storing the results of sub-problems to avoid redundant work. Examples include computing Fibonacci numbers and the Longest Common Subsequence problem.

The choice of algorithm will depend on the specific requirements of a problem and the desired performance characteristics, such as time complexity, memory usage, and stability.
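As one concrete instance of a search algorithm, Binary Search can be sketched in JavaScript as shown here. The function name `binarySearch` and the sample array are chosen for this example, and the sketch assumes the input array is already sorted in ascending order.

```javascript
// Binary Search: repeatedly halve the range that could contain the target.
// Returns the index of target in the sorted array, or -1 if it is absent.
function binarySearch(sorted, target) {
  let low = 0;
  let high = sorted.length - 1;

  while (low <= high) {
    const mid = Math.floor((low + high) / 2);
    if (sorted[mid] === target) {
      return mid;     // found it
    }
    if (sorted[mid] < target) {
      low = mid + 1;  // target can only be in the upper half
    } else {
      high = mid - 1; // target can only be in the lower half
    }
  }
  return -1; // the range is empty, so the target is not present
}

const numbers = [1, 3, 5, 7, 9, 11];
console.log(binarySearch(numbers, 7)); // 3
console.log(binarySearch(numbers, 4)); // -1
```

Each pass discards half of the remaining range, so the loop always terminates, which satisfies the finiteness property listed earlier.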

# Design Patterns

Design patterns are reusable solutions to common problems that occur in software design. They provide a structured approach to solving problems and can help to improve code quality, reduce development time, and increase the maintainability of a codebase.

There are several categories of design patterns, including:

- Creational patterns: Concerned with object creation mechanisms, trying to create objects in a manner suitable to the situation. Examples include the Factory Method, Abstract Factory, Singleton, Builder, and Prototype patterns.

- Structural patterns: Concerned with object composition and the relationships between objects. Examples include the Adapter, Bridge, Composite, Decorator, Facade, Flyweight, and Proxy patterns.

- Behavioral patterns: Concerned with communication between objects and the responsibility of objects. Examples include the Chain of Responsibility, Command, Interpreter, Iterator, Mediator, Memento, Observer, State, Strategy, Template Method, and Visitor patterns.

Each design pattern provides a specific solution to a common problem and can be applied in different scenarios. The choice of a particular design pattern will depend on the specific requirements of a project and the design goals that need to be achieved.

Design patterns are not specific to a particular programming language and can be applied in any language that supports object-oriented programming. The concepts and ideas behind design patterns can be adapted and modified to meet the needs of a specific project or programming environment.
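To make one of the behavioral patterns concrete, here is a minimal sketch of the Observer pattern in JavaScript. The `Subject` class, its method names, and the two observer functions are assumptions for this illustration. Real projects often rely on an existing event mechanism instead of writing their own.

```javascript
// Observer pattern: a subject keeps a list of observers
// and notifies each of them when something happens.
class Subject {
  constructor() {
    this.observers = [];
  }

  subscribe(observer) {
    this.observers.push(observer);
  }

  unsubscribe(observer) {
    this.observers = this.observers.filter((o) => o !== observer);
  }

  notify(data) {
    for (const observer of this.observers) {
      observer(data);
    }
  }
}

// Two observers that record what they receive.
const received = [];
const logger = (message) => received.push(`log: ${message}`);
const mailer = (message) => received.push(`mail: ${message}`);

const subject = new Subject();
subject.subscribe(logger);
subject.subscribe(mailer);
subject.notify("saved");     // both observers run

subject.unsubscribe(mailer);
subject.notify("deleted");   // only the logger runs

console.log(received);
// ["log: saved", "mail: saved", "log: deleted"]
```

The subject never needs to know what its observers do with the data, which keeps the two sides loosely coupled.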

# JavaScript

- Variables: used to store data, such as numbers or strings. Example: `let x = 42;`

- Data types: different types of data, such as strings, numbers, and booleans. Example: `"Hello World"` is a string, `42` is a number, and `true` is a boolean.

- Operators: symbols that perform operations on variables and values, such as addition `+`, subtraction `-`, and comparison `==`. Example: `x + 5` would evaluate to `47`.

- Conditional statements: control the flow of the program based on certain conditions. Example: `if (x > 40) { console.log("x is greater than 40"); }`

- Arrow Functions: concise syntax for creating functions. Example: `const add = (a, b) => a + b;`

- Arrays: collections of data stored in a single variable. Example: `const fruits = ["apple", "banana", "cherry"];`

- Objects: collections of data and functions stored in a single variable. Example: `const person = { firstName: "John", lastName: "Doe", age: 30 };`

# Homework

Complete the following exercises to get familiar with JavaScript.

- Turn in a screenshot of the completed lessons